Introduction

When working with microservice architectures, one has to deal with concerns like service registration and discovery, resilience, invocation retries, dynamic request routing and observability. Netflix pioneered frameworks like Eureka, Ribbon and Hystrix that address these concerns and enable microservices to function at scale. Below is an example of communication between two services written in Spring Boot that use Netflix Hystrix and other components for resiliency, observability, service discovery, load balancing and other concerns.

While the above architecture does solve some problems around resiliency and observability, it is still not ideal:

a. The solution does not apply to a polyglot service environment, as the libraries mentioned cater to a Java stack. For example, one would need to find or build libraries for Node.js to participate in service discovery and support observability.

b. The service is burdened with communication-specific concerns that it really should not have to deal with, like service registration and discovery, client-side load balancing, network retries and resiliency.

c. It introduces tight coupling with the chosen technologies for resiliency, load balancing, discovery, etc., making them very difficult to change in the future.

d. Due to the many dependencies of libraries like Ribbon, Eureka and Hystrix, there is an invasion of your application stack. If interested, I discussed this in my blog post on Shared Service Clients.
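To make point (b) concrete, here is a minimal Python sketch of the kind of client-side load balancing and retry logic a service ends up carrying in-process. It is an illustration only, not code from Ribbon or from the example project; the class name, instance addresses and the `flaky_send` stand-in are all hypothetical.

```python
import itertools
from typing import Callable, Optional, Sequence


class RoundRobinClient:
    """Client-side load balancer with retries, embedded in the calling service."""

    def __init__(self, instances: Sequence[str], max_attempts: int = 3) -> None:
        if not instances:
            raise ValueError("at least one instance is required")
        self._cycle = itertools.cycle(instances)
        self._max_attempts = max_attempts

    def call(self, send: Callable[[str], str]) -> str:
        """Try successive instances until one succeeds or attempts run out."""
        last_error: Optional[Exception] = None
        for _ in range(self._max_attempts):
            instance = next(self._cycle)
            try:
                return send(instance)
            except ConnectionError as error:
                last_error = error  # remember the failure, try the next instance
        raise ConnectionError(
            f"all {self._max_attempts} attempts failed"
        ) from last_error


# Hypothetical instance list; a real client would fetch it from a registry.
INVENTORY_INSTANCES = ["10.0.0.1:8080", "10.0.0.2:8080"]


def flaky_send(instance: str) -> str:
    """Stand-in for a network call in which the first instance is down."""
    if instance == "10.0.0.1:8080":
        raise ConnectionError(f"{instance} unreachable")
    return f"inventory served by {instance}"


if __name__ == "__main__":
    client = RoundRobinClient(INVENTORY_INSTANCES)
    print(client.call(flaky_send))
```

Every service in every language would need its own equivalent of this class, which is exactly the burden the list above describes.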

Sidecar Proxy

Instead of the above, what if a sidecar were introduced that takes on all the responsibilities around resiliency, registration, discovery, load balancing, reporting of client metrics, telemetry and so on?

The benefit of this architecture is that it facilitates a polyglot service environment with low friction: the majority of the concerns around networking between services are abstracted away to a sidecar, allowing the service to focus on business functionality. Lyft Envoy is a great example of a sidecar proxy (or Layer 7 proxy) that provides resiliency and observability to a microservice architecture.

Service Mesh

"A service mesh is a dedicated infrastructure layer for handling service-to-service communication. It’s responsible for the reliable delivery of requests through the complex topology of services that comprise a modern, cloud native application. In practice, the service mesh is typically implemented as an array of lightweight network proxies that are deployed alongside application code, without the application needing to be aware. (But there are variations to this idea, as we’ll see.)" - Buoyant.io

In a microservice architecture deployed on a cloud native model, one would deal with hundreds of services, each running multiple instances, with instances being created and destroyed by the orchestrator. Abstracting common cross-cutting functionality such as circuit breaking, dynamic routing, service discovery, distributed tracing and telemetry into a fabric with which services communicate appears to be the way forward.

Istio and Linkerd are two service meshes that I have played with, and I will share a bit about each.

Linkerd

Linkerd is an open source service mesh by Buoyant, developed primarily using Finagle and Netty. It can run on Kubernetes, DC/OS or a simple set of machines.

The Linkerd service mesh offers a number of features, such as:

- Load Balancing

- Circuit Breaking

- Retries and Deadlines

- Request Routing
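As an illustration of what circuit breaking means, the following is a minimal Python sketch of the general pattern. It is not Linkerd's implementation; the class name, thresholds and injectable clock are assumptions made for the example.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    """Fails fast after repeated failures, then retries after a cool-down."""

    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout: float = 5.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._failure_threshold = failure_threshold
        self._reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, operation: Callable[[], str]) -> str:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout:
                raise CircuitOpenError("circuit is open; failing fast")
            # Cool-down elapsed: half-open, let one trial call through.
        try:
            result = operation()
        except Exception:
            self._failures += 1
            half_open = self._opened_at is not None
            if half_open or self._failures >= self._failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

With a mesh, this state machine lives in the proxy rather than in each service, so the application code simply makes its call.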

Linkerd instruments top-line service metrics like request volume, success rates and latency distribution. Its dynamic request routing enables staging services, canaries and blue-green deploys with minimal configuration, using a powerful routing language called dtabs (delegation tables).
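The idea behind a canary can be sketched in a few lines of Python: send a small, configurable share of requests to the new version. This is not dtab syntax and not how Linkerd implements routing; the backend names and the 90/10 split are hypothetical.

```python
import random
from typing import Dict, Optional


def pick_backend(weights: Dict[str, float], rng: Optional[random.Random] = None) -> str:
    """Choose a backend name with probability proportional to its weight."""
    if rng is None:
        rng = random.Random()
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=1)[0]


# Hypothetical split: 90% of traffic to the stable version, 10% to the canary.
ROUTES = {"reviews-v1": 0.9, "reviews-v2": 0.1}

if __name__ == "__main__":
    rng = random.Random(42)
    sample = [pick_backend(ROUTES, rng) for _ in range(10_000)]
    canary_share = sample.count("reviews-v2") / len(sample)
    print(f"canary share: {canary_share:.1%}")
```

Shifting the weights gradually from the old version to the new one turns the same mechanism into a blue-green style cutover.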

There are a few ways to deploy Linkerd in Kubernetes. This post focuses on deploying Linkerd as a Kubernetes DaemonSet, running one pod on each node of the cluster.

Istio

Istio (Greek for "sail") is an open platform sponsored by IBM, Google and Lyft that provides a uniform way to connect, secure, manage and monitor microservices. It supports traffic shaping between microservices while providing rich telemetry.

Of note:

- Fine-grained control of traffic behavior with routing rules, retries, failover and fault injection

- Access Control, Rate Limits and Quota provisioning

- Metrics and Telemetry

- A Data Plane of Envoy Sidecars that mediate all traffic between services

- A Control Plane whose purpose is to manage and configure proxies to route and enforce traffic policies.
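Rate limiting, one of the policies listed above, is commonly explained with a token bucket. The Python sketch below illustrates that general technique only; it is not Istio's implementation or configuration format, and the class and parameter names are hypothetical.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained `rate_per_second`."""

    def __init__(
        self,
        rate_per_second: float,
        capacity: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._rate = rate_per_second
        self._capacity = capacity
        self._clock = clock
        self._tokens = capacity
        self._last_refill = clock()

    def allow(self) -> bool:
        """Return True and consume a token if the request may proceed."""
        now = self._clock()
        elapsed = now - self._last_refill
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last_refill = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

In a mesh, a check of this kind is applied to traffic by the infrastructure, so individual services do not each need their own limiter.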

Product-Gateway Example

The Product Gateway that I have used in many previous posts is used here as well to demonstrate a service mesh. The one major difference is that the service uses gRPC instead of REST. From a protocol perspective, an HTTP/1.x REST call is made to /product/{id} of the Product Gateway service. The Product Gateway service then fans out to the base product, inventory, price and reviews services using gRPC to obtain the different data points that represent the end Product. Proto schema elements from the individual services are used to compose the resulting Product proto. The gateway example is used for both the Linkerd and Istio examples. The project does not explore all the features of a service mesh but gives you enough of an example to try Istio and Linkerd with gRPC services using Spring Boot.

The code for this example is available at: https://github.com/sanjayvacharya/sleeplessinslc/tree/master/product-gateway-service-mesh
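The fan-out and composition performed by the gateway can be sketched as follows. This is a simplified Python illustration, not the project's Spring Boot code: the four `fetch_*` functions are hypothetical stubs standing in for the gRPC calls, and issuing them in parallel is an assumption of the sketch.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List


# Hypothetical stubs standing in for the four backend services.
def fetch_base_product(product_id: int) -> Dict[str, Any]:
    return {"productId": product_id, "description": "Plaid button-down shirt"}


def fetch_inventory(product_id: int) -> int:
    return 93


def fetch_price(product_id: int) -> float:
    return 38.99


def fetch_reviews(product_id: int) -> List[str]:
    return ["Very Beautiful", "My husband loved it"]


def get_product(product_id: int) -> Dict[str, Any]:
    """Call the four backends concurrently and merge their answers."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        base = pool.submit(fetch_base_product, product_id)
        inventory = pool.submit(fetch_inventory, product_id)
        price = pool.submit(fetch_price, product_id)
        reviews = pool.submit(fetch_reviews, product_id)
        # result() blocks until each call finishes and re-raises its errors.
        product = dict(base.result())
        product["inventory"] = inventory.result()
        product["price"] = price.result()
        product["reviews"] = reviews.result()
    return product


if __name__ == "__main__":
    print(get_product(9310301))
```

Each of the four calls is a hop that the mesh can load balance, retry and measure without the gateway code changing.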

You can import the project into your favorite IDE and run each of the Spring Boot services. Invoking http://localhost:9999/product/931030.html will call the Product Gateway, which will then invoke the other services using gRPC and finally return a minimalistic product page.

To run the project on Kubernetes, I installed Minikube on my Mac. Ensure that you can dedicate sufficient memory to your Minikube instance. I did not set up a local Docker registry but chose to use my local Docker images. Stack Overflow has a good posting on how to use local Docker images. In the Kubernetes deployment descriptors, you will notice that imagePullPolicy is set to Never for the product gateway images. Before you proceed, to be able to use the Minikube Docker daemon, ensure that you have executed:

>eval $(minikube docker-env)

Install the Docker images for the different services of the project by executing the below from the root folder of the project:

>mvn install

Linkerd

In the sub-folder titled linkerd there are a few YAML files. The linkerd-config.yaml file sets up Linkerd and defines the routing rules:

>kubectl apply -f ./linkerd-config.yaml

Once the above is completed you can access the Linkerd Admin application by doing the following:

>ADMIN_PORT=$(kubectl get svc l5d -o jsonpath='{.spec.ports[?(@.name=="admin")].nodePort}')

>open http://$(minikube ip):$ADMIN_PORT

The next step is to install the different product gateway services. The Kubernetes definitions for these services are present in the product-linkerd-grpc.yaml file.

>kubectl apply -f ./product-linkerd-grpc.yaml

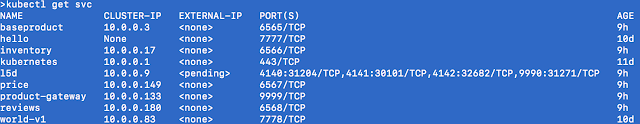

Wait for a bit for Kubernetes to spin up the different pods and services. You should be able to execute 'kubectl get svc' and see something like the below showing the different services up:

We can now execute a call on the product-gateway and see it invoke the other services via the service mesh. Execute the following:

>HOST_IP=$(kubectl get po -l app=l5d -o jsonpath="{.items[0].status.hostIP}")

>INGRESS_LB=$HOST_IP:$(kubectl get svc l5d -o 'jsonpath={.spec.ports[2].nodePort}')

>http_proxy=$INGRESS_LB curl -s http://product-gateway/product/9310301

The above also demonstrates Linkerd acting as an HTTP proxy. With http_proxy set, curl sends the request to Linkerd, which then looks up product-gateway via service discovery and routes the request to an instance.

The above call should result in a JSON representation of the Product as shown below:

{"productId":9310301,"description":"Brio Milano Men's Blue and Grey Plaid Button-down Fashion Shirt","imageUrl":"http://ak1.ostkcdn.com/images/products/9310301/Brio-Milano-Mens-Blue-GrayWhite-Black-Plaid-Button-Down-Fashion-Shirt-6ace5a36-0663-4ec6-9f7d-b6cb4e0065ba_600.jpg","options":[{"productId":1,"optionDescription":"Large","inventory":20,"price":39.99},{"productId":2,"optionDescription":"Medium","inventory":32,"price":32.99},{"productId":3,"optionDescription":"Small","inventory":41,"price":39.99},{"productId":4,"optionDescription":"XLarge","inventory":0,"price":39.99}],"reviews":["Very Beautiful","My husband loved it","This color is horrible for a work place"],"price":38.99,"inventory":93}
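When http_proxy is set as in the curl call earlier, the client connects to the proxy and puts the full URL in the request line instead of just the path. The Python sketch below builds such a request as text, purely to show its shape; the function name is hypothetical, and a real client like curl sends additional headers.

```python
from urllib.parse import urlsplit


def build_proxy_request(url: str) -> str:
    """Return a minimal HTTP/1.1 request as sent to a forward proxy for `url`."""
    parts = urlsplit(url)
    if parts.scheme != "http" or not parts.netloc:
        raise ValueError("expected an absolute http:// URL")
    return (
        f"GET {url} HTTP/1.1\r\n"  # absolute-form target: the whole URL
        f"Host: {parts.netloc}\r\n"  # logical service name, not an IP address
        "Connection: close\r\n"
        "\r\n"
    )


if __name__ == "__main__":
    print(build_proxy_request("http://product-gateway/product/9310301"))
```

The Host value is the logical name product-gateway, which is what lets the proxy resolve the destination through service discovery.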

You can subsequently install linkerd-viz, a monitoring application based on Prometheus and Grafana that provides metrics from Linkerd. Three main categories of metrics are visible on the dashboard: Top Line (cluster-wide success rate and request volume), Service Metrics (a section for each deployed service showing success rate, request volume and latency) and Per-instance Metrics (success rate, request volume and latency for every node in the cluster).

>kubectl apply -f ./linkerd-viz.yaml

Wait a bit for the pods to come up, and then you can view the dashboard by executing:

>open http://$HOST_IP:$(kubectl get svc linkerd-viz -o jsonpath='{.spec.ports[0].nodePort}')

The dashboard shows top-line service metrics as well as metrics for the individual monitored services, as shown in the screenshots below:

You could also go ahead and install linkerd-zipkin to capture tracing data.
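As a rough illustration of what the success rate and latency figures on the linkerd-viz dashboard mean, the Python sketch below computes them from a handful of request records. The record type, the sample values and the nearest-rank percentile method are assumptions of the example, not how Linkerd or Prometheus calculate them.

```python
import math
from typing import List, NamedTuple


class RequestRecord(NamedTuple):
    latency_ms: float
    ok: bool


def success_rate(records: List[RequestRecord]) -> float:
    """Fraction of requests that succeeded."""
    return sum(1 for record in records if record.ok) / len(records)


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value covering pct% of the samples."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical sample: four fast successes and one slow failure.
RECORDS = [
    RequestRecord(12.0, True),
    RequestRecord(15.0, True),
    RequestRecord(11.0, True),
    RequestRecord(240.0, False),
    RequestRecord(14.0, True),
]

if __name__ == "__main__":
    latencies = [record.latency_ms for record in RECORDS]
    print("request volume:", len(RECORDS))
    print("success rate:", success_rate(RECORDS))
    print("p50 latency (ms):", percentile(latencies, 50))
    print("p99 latency (ms):", percentile(latencies, 99))
```

The gap between the median and the tail percentile in this sample shows why a latency distribution is more informative than a single average.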

Istio

The Istio installation page is pretty thorough and presents a few installation options. Select the ones that make sense for your deployment. For my demonstration, I selected the following:

>kubectl apply -f $ISTIO_HOME/install/kubernetes/istio-rbac-beta.yaml

>kubectl apply -f $ISTIO_HOME/install/kubernetes/istio.yaml

You can install metrics support by installing Prometheus, Grafana and Service Graph.

>kubectl apply -f $ISTIO_HOME/install/kubernetes/addons/prometheus.yaml

>kubectl apply -f $ISTIO_HOME/install/kubernetes/addons/grafana.yaml

>kubectl apply -f $ISTIO_HOME/install/kubernetes/addons/servicegraph.yaml

Install the product-gateway artifacts using the below command which uses istioctl kube-inject to automatically inject Envoy Containers in the different pods:

>kubectl apply -f <(istioctl kube-inject -f product-grpc-istio.yaml)

>export GATEWAY_URL=$(kubectl get po -l istio=ingress -o 'jsonpath={.items[0].status.hostIP}'):$(kubectl get svc istio-ingress -o 'jsonpath={.spec.ports[0].nodePort}')

If your configuration is deployed correctly, it should resemble something like the below:

You can now view an HTML version of the product, a primitive product page, at http://${GATEWAY_URL}/product/931030.html, or access a JSON representation at http://${GATEWAY_URL}/product/931030

Ensure you have set up port-forwarding for ServiceGraph and Grafana as described in the installation instructions and as shown below:

>kubectl port-forward $(kubectl get pod -l app=grafana -o jsonpath='{.items[0].metadata.name}') 3000:3000 &

>kubectl port-forward $(kubectl get pod -l app=servicegraph -o jsonpath='{.items[0].metadata.name}') 8088:8088 &

To see the service graph and Istio Viz dashboard, you might want to send some traffic to the service.

>for i in {1..1000}; do echo -n .; curl -s http://${GATEWAY_URL}/product/9310301 > /dev/null; done

You should be able to access the Istio-Viz dashboard for top-line and detailed service metrics, as shown below, at http://localhost:3000/dashboard/db/istio-dashboard:

Similarly, you can access the service graph at http://localhost:8088/dotviz to see a graph similar to the one shown below depicting service interaction:

Conclusion & Resources

A service mesh architecture appears to be the way forward for cloud native deployments. The benefits provided by the mesh do not need any reiteration. Linkerd is more mature than Istio, but Istio, although newer, has the strong backing of IBM, Google and Lyft to take it forward. How do they compare with each other? The blog post by Abhishek Tiwari comparing Linkerd and Istio features is a great read on the topic of service meshes and how they compare. Alex Leong has a nice YouTube presentation on Linkerd that is a good watch. Kelsey Hightower has a nice example-driven presentation on Istio.
